Dr Stephen Redmond is an Insight Funded Investigator and an Associate Professor at the School of Electrical and Electronic Engineering at UCD. One of his research streams focuses on tactile sensing, spanning from the study of human tactile physiology through to the development of friction-sensing tactile sensors for robotic grippers. More recently, his group has been interested in combining deep neural networks with reinforcement learning to enable, among other things, autonomous dexterous robotic manipulation. He explains more about this work below:

“As robots move into unstructured environments, such as homes, hospitals, and warehouses, they will require an artificial intelligence that allows them to quickly self-learn and self-supervise during skilful robotic movement and dexterous object manipulation. Human action in the world, such as walking and manipulating things with our hands, is largely effortless and yet exceptionally skilful; this is because our movements are often predictively planned and controlled so that consequences of our actions are anticipated before we move. It is believed that the predictions our brain makes are achieved using rich internal representations (i.e., mental models of the world), where the mind internally represents the scene (i.e., the object and environment) and “imagines” or simulates the action in this model of the world. We can see the world in our mind’s eye!

“Robots are not very smart right now, but we are rapidly making them smarter. State-of-the-art robotic systems rely on intelligence driven by reinforcement learning, where the next action to be taken is rote learned from a very large number of attempts at a task, with punishments given when an attempt fails and rewards given when an attempt succeeds, which helps the robot learn which actions are worth taking in a given situation. The knowledge learned through this process is stored in the weights and biases of a deep neural network. But, to repeat, the actions are effectively rote learned. There is not much intelligence at work here. If the task changes or something unexpected happens, the robot will usually perform very badly in the new situation.

“In the literature, some steps have already been taken in the direction of developing systems that can internally represent their world, predict the outcomes of their actions, and plan accordingly; that is, have a sort of artificial imagination! However, this approach has mostly been applied to video game play, and not so much to robotics. This is what we are working on at SFI Insight, in a collaboration between researchers at UCD and DCU, to enable robots to create internal representations so they can plan and act with greater intelligence.

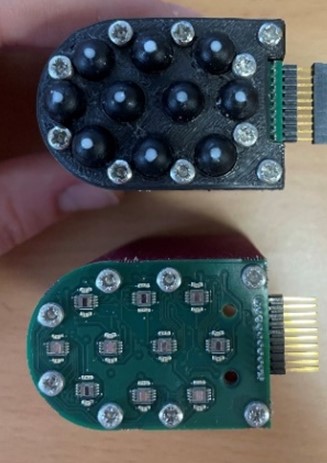

“However, one important element that robots lack that would help them perform better in manipulation tasks is a sense of touch; could you easily tie your shoelaces after playing in the snow until your fingers were numb? In an SFI-funded project at UCD, my team has been developing the next generation of tactile sensors. These sensors can be added to the finger pads and palms of the robot’s hands, to its feet, or to other parts of its body, to allow it to interact with the world in a more controlled way, and importantly to learn from these interactions. You can see a demo of a single tactile sensing prototype here. We can make arrays of these sensors to form fingers for robotic grippers, like that shown in the photo, which shows a robot finger with ten tactile sensing elements. We continue to make these sensors smaller so they can be seamlessly arranged to make a sensitive robot skin, and they may be useful for prosthetic hands too.

“Endowed with an exquisite sense of touch and an artificial intelligence inspired by the human brain that can use its own imagination to plan and take better actions, robots will soon be able to help us with dull, dangerous, and dirty work that we would rather nobody have to do. Dexterous robots can support our supply chains, agriculture industry, construction work, aged care and health services, and probably many applications we have yet to imagine.

“Until now, robots have been out of touch with the real world. With a sense of touch and a bioinspired brain, we can expect much smarter and more helpful robots in the coming years.”